I lost faith, and I wasn’t alone.

I’ve been working as a content writer for almost six years now. Writing paid my bills, and honestly, it’s the coolest career choice I could’ve imagined.

But last year, I shared a LinkedIn post about my struggle — the uncertain environment created by all the “AI is coming for your job” chaos and the “this is the end for B2B writers” panic.

I almost made up my mind to quit writing. I even spoke to people in the industry, writers who weren’t in the top 1%, and most of them mentioned two things: first, they weren’t getting enough work; second, they were trying to transition into other careers. Even the top 1% were somewhat pessimistic, or at least quietly beginning to doubt themselves.

So what’s happened since?

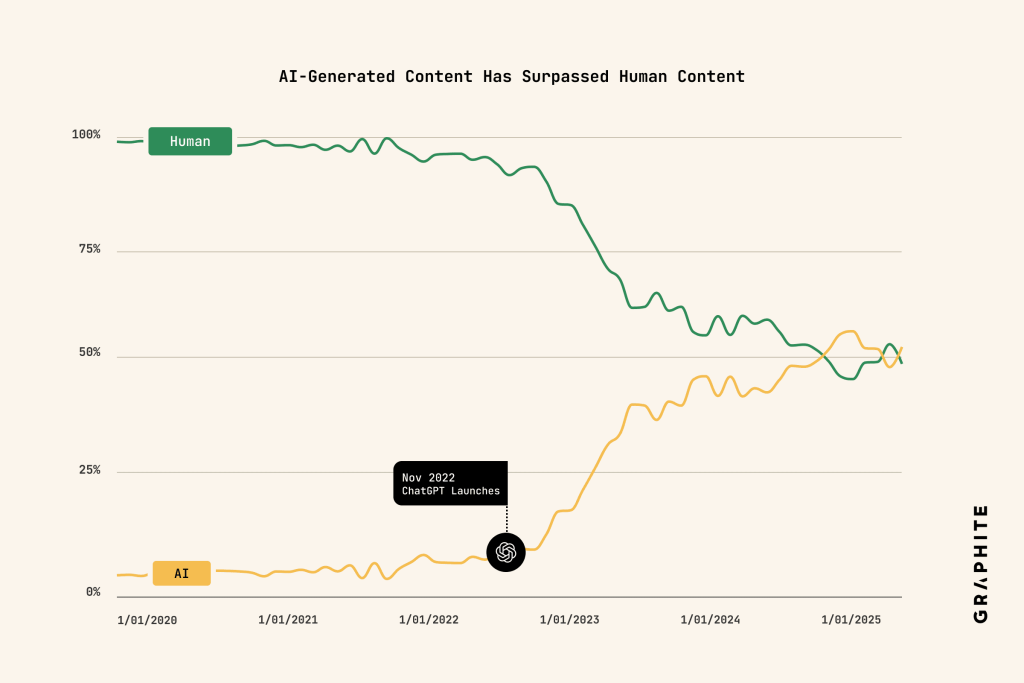

It might be a little early to say this out loud, but the whole panic seems to have escalated too quickly. A report by Graphite titled “More Articles Are Now Created by AI Than Humans” almost reads like a moment of celebration. “It feels almost too emotional to say out loud: humans still need human writing!”

The study suggests that while AI-generated content has become widespread because of its low cost and improving quality, it rarely performs well in Google or ChatGPT results — hinting that human oversight and originality still matter.

Does the Graphite study prove anything?

Remember all those AI bros shouting at the top of their lungs about how they used AI to churn out hundreds of blogs in record time? Or those LinkedIn posts where you had to leave some self-deprecating comment like “AI blog mastery” just to get access to a “framework” that supposedly saved them millions and made them billions, yet they were giving it all away for free?

Whether or not they actually achieved what they claimed, one thing’s for sure: they were writing and publishing AI-generated blogs. I’m not talking about using AI to format, edit, or polish your original writing. I’m talking about completely AI-written content.

This is where Graphite’s study comes in. With all that AI-generated content being pumped out for search engines and LLMs to index, how much of the internet does it actually make up?

So, Graphite analyzed 65,000 English-language articles from Common Crawl, and the researchers found that the number of AI-generated articles surpassed human-written ones in late 2024.

That means more new articles are now being published online by machines than by people. Which feels like blasphemy.

But here’s the anticipated twist: the rise of AI-generated content has actually stalled since mid-2024.

Why? Because, as Graphite suggests, many publishers discovered that AI content doesn’t perform well in Google Search or even in ChatGPT’s own retrieval results. In other words, sheer volume doesn’t translate into more eyeballs or conversions.

This study also makes you wonder: is it just that AI-generated writing isn’t good enough yet, or is this an early sign of a larger snowball effect, one that points toward an AI bubble?

There’s definitely an AI bubble — or is there?

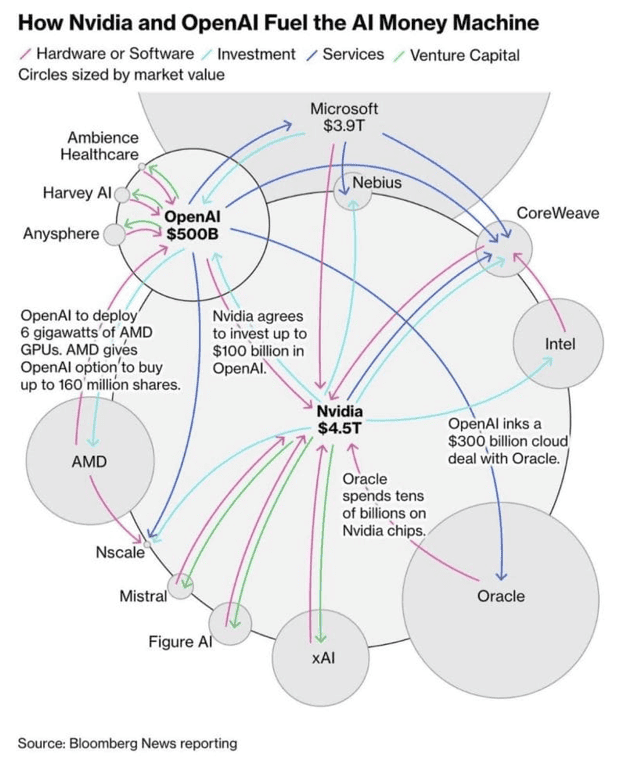

If you really want to understand what’s going on, here’s a diagram from Bloomberg that’s been making the rounds on the internet. It illustrates the complex web of financial, technological, and strategic relationships fueling the booming AI ecosystem — powered mainly by Nvidia and OpenAI.

It showcases how market leaders — Nvidia, OpenAI, Oracle, Microsoft, AMD, Intel, and several emerging AI startups — are interconnected through hardware supply, multi-billion-dollar investments, cloud services, and venture capital. The circle sizes represent market values, highlighting the dominance of giants like Nvidia ($4.5T), OpenAI ($500B), and Oracle.

Here’s the most important insight: Nvidia has agreed to invest up to $100 billion in OpenAI, a move that allows OpenAI to use those funds to purchase Nvidia’s powerful AI chips and deploy roughly 10 gigawatts of Nvidia systems for its next-generation data centers.

At the same time, OpenAI struck a $300 billion cloud infrastructure deal with Oracle, even though its annual recurring revenue (ARR) is only about $13 billion. Oracle, in turn, will rely heavily on Nvidia’s GPUs to build and operate these massive AI data centers. This creates a self-reinforcing financial ecosystem in which Nvidia effectively funds its own chip demand through its customers.

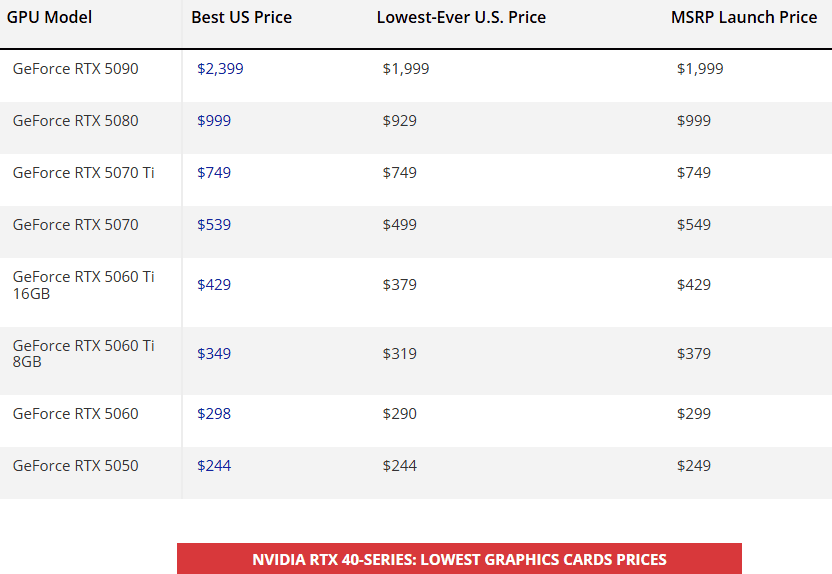

But beyond this chart, if you look around, the signs are all there: money pouring into every AI startup with a half-decent pitch deck, tech valuations hitting the ceiling, and GPU rental prices dropping like second-hand phones. Everyone’s building something “AI-powered,” whether or not they need to. It feels… familiar. Almost like déjà vu from the dot-com era, only this time wrapped in machine learning jargon and GPU servers.

Financial reports from outlets like the FT and Beinsure hint that the AI hype isn’t just about products or productivity anymore; it’s about money chasing money. GPU rental prices have plummeted, signaling oversupply, and investors are growing cautious. Some even say the AI market is showing classic bubble symptoms: inflated valuations, unsustainable demand, and too much optimism packed into too little proof.

And it’s not just hardware. On the content side, the flood of AI-written material has already hit a plateau, and Graphite’s study strongly suggests that most of it doesn’t perform well. When both investors and publishers start realizing the returns don’t match the hype, you can almost hear the air hissing out of the bubble.

However, there seem to be multiple possible outcomes, not just the simplified version in which there’s definitely a bubble and it’s going to pop any day now.

One perspective, shared by Mark Zuckerberg, is that even if there is a bubble and companies lose billions on their investments, not investing at all is actually riskier. Meanwhile, Jeff Bezos said in an interview that such bubbles don’t always mean bad news, because after the dust settles, a few strong companies remain, and they often end up benefiting humanity in the long run.

On the other hand, several finance experts agree that there is a bubble, but think it might take another two to three years to pop.

The quiet return of human writing?

I think it was only about a month ago that people on LinkedIn stopped talking about how the em dash was supposedly a giveaway that something was AI-written, especially by ChatGPT. The reactions came from two kinds of folks: non-writers who thought the em dash was some new invention by GPT, and writers passionately defending their beloved punctuation.

As you can probably tell, I still love using the em dash — it’s my favorite. Oh and here’s one of my favorite videos on the em dash situation.

But the point here isn’t the em dash; it’s the whole package. Even when AI-written text avoids openers like “in an ever-changing digital landscape,” a sentence can sound perfectly fine at first glance yet make no real sense when you actually read it.

AI also tends to open loops that never close, or to stuff paragraphs with fluff without really saying anything. Not to mention, AI doesn’t truly know what it’s saying, and it can hallucinate, which is a huge problem.

Speaking of AI hallucinations, I think they’ve become somewhat normalized. We double-check when something seems off, like when an AI pretends to read from the live internet even though that particular model doesn’t have access, or when it cites a stat that doesn’t exist.

But what if this takes an even darker turn on a much larger scale?

That’s exactly what happened when Deloitte Australia faced controversy in October 2025. A government-commissioned report worth A$440,000 was found to contain numerous AI-generated errors, including fabricated citations, false references, and even a bogus court quote.

In response, Deloitte agreed to refund a portion of the payment to the Australian government and issued a corrected report. This incident sparked significant debate around AI’s accountability, professional diligence, and the ethical use of AI in consulting.

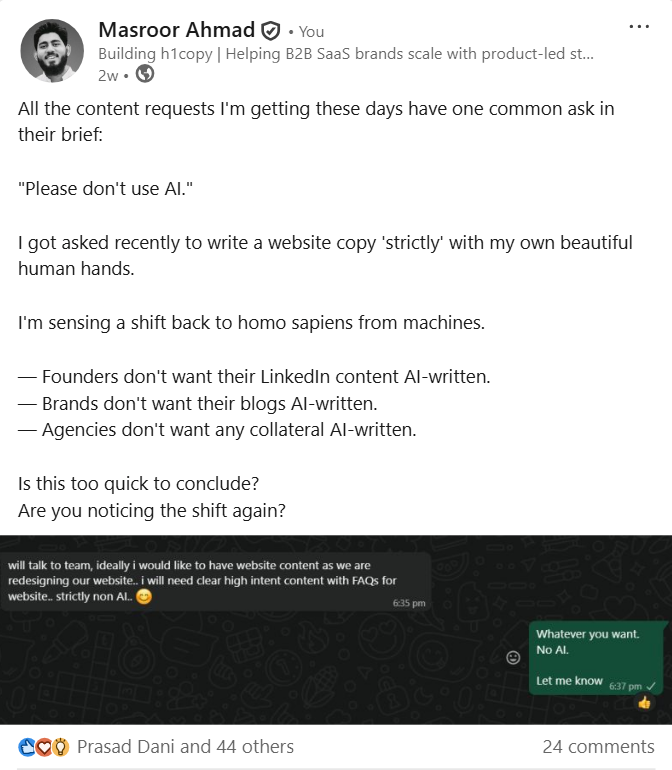

This is really bad news for AI adoption. But it’s not just about hallucinations or consulting firms; it’s also about companies not wanting to sound soulless. Here are a few examples.

Companies increasingly embed “No AI” clauses in contracts with their agencies to prevent the unauthorized use of AI tools in campaign content, aiming to protect their brand reputation and avoid AI-generated errors or soulless messaging.

Nintendo opposes AI-generated content in its creative output, emphasizing human creativity and protecting its intellectual property from AI use, which sets it apart from competitors embracing AI. And it’s not just Nintendo; Unsplash, Cadbury, PosterSpy, and others have taken similar stances.

It’s very easy to find stories like these. One of the less surprising ones is that companies are hiring humans again to fix AI-generated content. AI has become unfashionable: cheap branding that lacks creativity. Brands don’t want to be associated with it; using AI almost feels like promoting something morally wrong.

The point I’m making is this: maybe people don’t really want AI, especially businesses trying to sound human and win trust through authentic storytelling. Incidents like these might push B2B SaaS companies to seriously rethink the kind of content they want to put out.

And it feels like the trend is slowly swinging back. Some freelancers are sharing fully booked calendars, while agencies are clarifying that they don’t write entire pieces with AI; they just use it to streamline the process, which seems 100% fair, by the way. Meanwhile, users are complaining that they don’t want AI-generated slop on their favorite platforms.

The human touch in your brand

After all this AI noise, it feels almost poetic that the real differentiator for brands in 2025 isn’t who uses AI better; it’s who sounds more human. Maybe this is an oversimplification, and there’s a separate discussion to be had about using AI in the backend to support your tech versus using AI to actually speak as a brand.

But let’s dive into why brands shouldn’t use AI to talk to humans.

Lou Dubois, in his recent Inc. article “Why Being More Human Is Your Brand’s Biggest Asset in 2025 and Beyond,” puts it simply: modern business isn’t just about selling a great product anymore. It’s about showing up with honesty, empathy, and a voice that doesn’t sound like it came from a prompt.

Dubois cites research showing that emotion drives 95% of purchase decisions: not price, not logic, but feeling. Think about that: people buy from brands that make them feel something. When AI content floods every feed, that emotional connection becomes the ultimate moat.

The article also shares examples of campaigns that proved this point by highlighting a brand’s human side. Dove’s “The Code,” which promised never to use AI-generated women in its ads, struck a chord because it felt real.

Then there’s Patagonia’s raw honesty about its supply chain challenges and Allbirds’ quiet transparency around its carbon footprint. All of it points to the same truth: people don’t fall in love with perfection; they connect with imperfection, sincerity, and purpose.

Where does h1copy stand?

As a B2B SaaS content writing agency, we’ve written tons of long-form, product-led BOFU (bottom-of-the-funnel) pieces. Why am I being so specific about the type of content?

Well, you’d assume that the more technical, feature-heavy, and bottom-of-the-funnel a B2B SaaS piece is, the easier it should be for an LLM to write naturally flowing content for you.

Right? Well, not entirely.

During my experimentation phase last year, I wrote the most detailed prompts I could for a few pieces, but every single time, I ran into two major issues:

1. The sentences felt way too mechanical, both for my taste and for the brands’.

2. There was no consistency or control over how two paragraphs under the same heading sounded.

Both issues were fixable if I went back and forth refining the prompts and editing the output myself. But this drained my brain glucose and was frustratingly time-consuming.

Not to mention it disrupted my overall writing flow: I’d almost forget where I was in the outline because I’d spent way too much energy fixing a jumbled passage the AI had produced.

That’s the first side of using AI to write, which I’m not a fan of and have mostly avoided ever since. But there’s another side that most people don’t talk about, or simply aren’t aware of:

Making sense of information overload.

When you onboard a B2B SaaS brand, you expect (in a good way) to drown in a sea of documents: brand guidelines, product documentation, editorial guidelines, detailed briefs, product demos, case studies, GTM sheets, positioning documents, and so on.

And I love this. However, it will take a toll on your sanity if you try to use all that information at once to build a feature comparison table, or to write a very detailed section about a feature while juggling every document you need to pull the details from. You can probably imagine more use cases like these.

Simply put, the fix is to dump everything you have on the brand into the AI, then ask it questions, have it draft a block of text, let it create a table, double-check details, and so on.

I have a few more practical cases, but mostly I’m trying to draw a line between writing directly with AI (or outsourcing your primary thinking to it) and AI-powered knowledge management.

This is where AI is great: work that’s less creative and more technical.

However, you still can’t close your eyes and trust AI outputs 100%. Even for technical tasks, you need to double-check, because AI hallucinations can be expensive, as the Deloitte Australia controversy showed.

To be clear: I wouldn’t suggest using AI to write your blogs, LinkedIn posts, eBooks, white papers, or any kind of creative work. Where I do suggest (and personally use) AI is in handling processes that take a lot of manual time, while still approaching the results with a healthy dose of skepticism.

P.S. If you want to know how to optimize your content for LLMs, check out: How To Write Long-Form Blogs + SME Insights.

What to make of all this?

There are nuances in the Graphite study.

The researchers used SurferSEO’s AI detection algorithm to separate human-written from machine-written articles. Note that the study itself admits there’s no foolproof way of knowing whether something is AI-written, and that popular AI detectors like Originality.ai, GPTZero, Grammarly, and Surfer might simply not work.

Their validation process showed a low false positive rate (around 4%) and a very high true positive rate (over 99%), lending some confidence to their numbers. However, they admit that detecting AI perfectly is nearly impossible, especially as models like GPT-4o continue to evolve and blend seamlessly with human writing.
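To get a feel for why those two error rates matter, here’s a small sketch of the standard way to correct an observed share for a detector’s mistakes, often called the Rogan-Gladen estimator. This is my own illustration, not Graphite’s method, and it assumes the detector’s true and false positive rates stay constant across every article in the dataset, which real detectors don’t guarantee. The 50% observed share is a hypothetical number, not a figure from the study.

```python
def corrected_share(observed: float, tpr: float, fpr: float) -> float:
    """Estimate the true share of AI-written articles from a detector's observed share.

    If a fraction `true` of articles is AI-written, the detector flags
    observed = tpr * true + fpr * (1 - true), so true = (observed - fpr) / (tpr - fpr).
    The result is clipped to [0, 1] because sampling noise can push it outside that range.
    """
    if tpr <= fpr:
        raise ValueError("tpr must exceed fpr for the correction to be meaningful")
    true_share = (observed - fpr) / (tpr - fpr)
    return min(max(true_share, 0.0), 1.0)


# Roughly the error rates reported above, plus a hypothetical observed share.
tpr, fpr = 0.99, 0.04
observed = 0.50
print(f"{corrected_share(observed, tpr, fpr):.3f}")  # prints 0.484
```

Under these assumptions, a detector that flags half of all articles implies a true AI share of roughly 48%, so a 4% false positive rate nudges the headline number without overturning it.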

It’s also worth noting that the study focuses strictly on “AI-generated” versus “human-written” content, not the hybrid gray area where most modern content actually lives. Maybe we’ll see a study like that in the future, or maybe there won’t be any point, because if the content sounds great, does it really matter whether it was polished using AI?

Moreover, countless writers now use AI for ideation, outlining, or first drafts before rewriting by hand. Graphite acknowledges this as a major limitation: AI-assisted, human-edited work may be even more prevalent, but it’s far harder to classify or measure. I fall into that category somewhat myself, and I think most writers would admit they live somewhere in that messy middle.

So, does the study prove anything definitive? Not entirely. It shows that AI content is abundant and cheap to produce, but it also quietly suggests that human creativity, editing, and credibility still matter, especially when it comes to being found, read, and trusted.

I’d also argue that who is using AI matters more than how they’re using it, since the how is much easier to master. Someone truly skilled at their craft will use AI to work faster without letting it mask their own writing style.